Algorithms Are Making Government Decisions. The Public Needs to Have a Say.

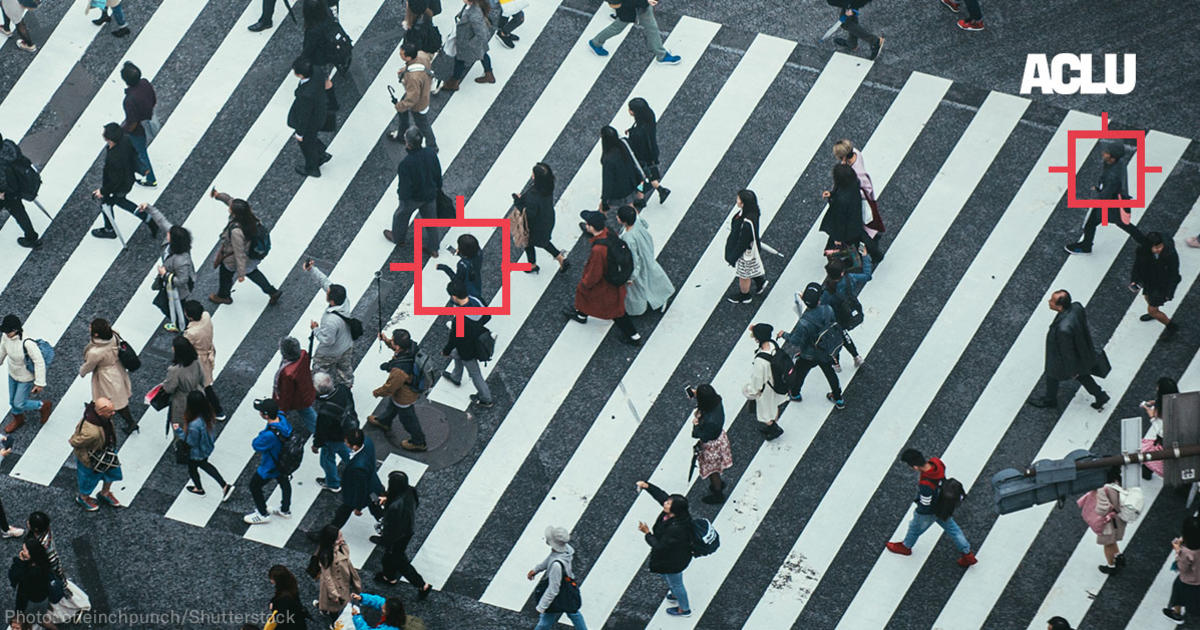

AI and automated decision systems are reshaping core social domains. Yet it remains incredibly difficult to assess and measure the nature and impact of these systems, even as research has shown their potential for biased and inaccurate decisions that harm the most vulnerable. These systems often function in opaque, invisible ways that are not subject to the accountability or oversight the public expects.

Consider how a lack of such public oversight hit the New Orleans community. In 2012, the New Orleans Police Department contracted with the data analytics company Palantir to build a state-of-the-art predictive policing system, designed to help the police identify people in the New Orleans community who are likely to commit violence or become the victim of violence.

The accuracy and usefulness of such predictive policing and “heat mapping” approaches are very much in question. Recent research has found that predictive policing has great potential to disparately impact communities of color, amplifying existing patterns of discrimination in policing. Other research has raised doubts about whether predictive policing is effective at all.

This controversial and potentially biased system was put in place with no oversight. Until a report published last month, even members of the New Orleans City Council had no idea what their own police department was doing.

Other jurisdictions are similarly grappling with the lack of oversight over invisible automated systems. Former New York City Council Member James Vacca, sponsor of the legislation forming NYC’s new automated decision system task force, cited his own lack of insight into how the city is using automated decision technologies as a reason for drafting the bill.

This is why we at AI Now released a report on Monday calling for “Algorithmic Impact Assessments” (AIAs). AIAs provide a strong foundation on which oversight and accountability practices can be built, by giving policymakers, stakeholders, and the public the means to understand and govern the AI and automated decision systems used by core government agencies.

Algorithmic Impact Assessments would first give the public the basic knowledge it needs through disclosure. Before procuring a new automated decision system, agencies would be required to publicly disclose information on the system’s purpose, reach, and potential impact on legally protected classes of people.

Beyond such disclosure, agencies would also be required to provide an accounting of a system’s workings and impact, including any biases or discriminatory behavior the system might perpetuate. Given the many contexts and many types of systems, this would be accomplished not through a one-size-fits-all audit protocol, but by engaging with external researchers and stakeholders, and ensuring that they have meaningful access to an automated decision system.

These external researchers must include people from a broad array of disciplines and experience. Take, for example, the Allegheny Family Screening Tool, a tool used in Allegheny County, Pennsylvania to help judge the risk that a child might face abuse or neglect. Researchers with different toolsets have yielded insights on how the tool makes predictions, how employees of the Allegheny Department of Human Services use it to make decisions, and how it affects people subject to those decisions.

Finally, agencies would need to honor the public’s right to due process. This means ensuring that meaningful public engagement is integrated into all stages of the AIA process, before, during, and after the assessment. A “notice and comment” process, in which agencies solicit public feedback on their assessments, would give the public a chance to raise concerns and, in some cases, even challenge whether an agency should adopt a particular automated decision system. Additionally, if an agency fails to adequately complete an AIA, or if harms go unaddressed by the agency, the public should have some method of recourse.

In developing AIA legislation, lawmakers will need to address several points. For example, how should external researchers be funded for their efforts? And what should agencies do when private vendors that sell automated decision systems resist transparency? Our position is that vendors should be required to waive their trade secrecy claims on information required for exercising oversight.

The rise of automated decision systems has already had and will continue to have an impact on the most vulnerable people. That’s why communities across the country need far more insight into government’s use of these systems, and far more control over which systems are used to shape their lives.

This piece is part of a series exploring the impacts of artificial intelligence on civil liberties. The views expressed here do not necessarily reflect the views or positions of the publisher.
