Buried on page A25 of Thursday’s New York Times is a tiny story on what’s likely to become a big problem after the recent horrific mass shooting. According to the report, top intelligence officials in the New York City Police Department met on Thursday to explore ways to identify “deranged” shooters before any attack. One of these tactics would involve “creating an algorithm” to identify keywords in online public sources indicative of an impending incident. In other words, they seek to build an algorithm to constantly monitor Facebook and Twitter for terms like “shoot” or “kill.”

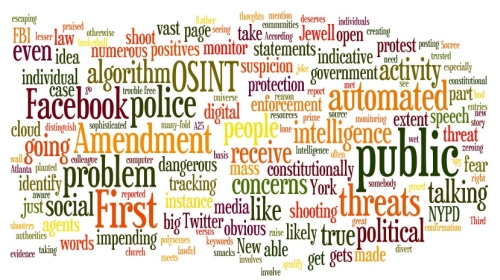

This is not a new idea. It’s part of what the defense and intelligence communities call “open source intelligence” or OSINT. And it can raise serious First Amendment concerns, especially when it’s used domestically and when it involves automated data mining by law enforcement agencies like the NYPD.

At the outset, it’s important to understand exactly what we’re talking about here. This is not tracking when police receive a tip that someone is posting public threats. Nor is it even a police officer taking it upon herself to scour for leads. Here, we’re talking about a computer at the NYPD automatically reading every post on a social networking site and flagging entries with certain words for police scrutiny. This raises numerous constitutional concerns, many obvious and some less so.

First, even when you’re talking about relatively sophisticated algorithms that, for instance, are able to distinguish between different senses of a word (like “shoot” with a basketball versus a gun), you’re going to get a vast universe of false positives. You’re also going to get true-false positives: people making dumb threats on their Facebook page as, for instance, a joke. To the extent these are “true threats” directed at an individual, they receive lesser First Amendment protection, but “true threats” are going to be a small subset of the vast amount of idiotic trolling that happens on social media on a daily basis. This problem presents an insurmountable administrative burden, not to mention the fact that the digital dragnet will ensnare numerous innocent people.
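To see how quickly the false positives pile up, consider a minimal sketch of the crudest version of such a filter. This is an illustration only, not a description of anything the NYPD has built: the keyword list is taken from the two example terms mentioned above, while the function name `flag_post` and the sample posts are hypothetical and invented for this example.

```python
import re

# Keywords borrowed from the article's own examples; any real list is unknown.
KEYWORDS = {"shoot", "kill"}


def flag_post(text: str) -> bool:
    """Return True if any keyword appears as a whole word (case-insensitive)."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return not KEYWORDS.isdisjoint(words)


# Hypothetical, entirely harmless posts made up for illustration.
SAMPLE_POSTS = [
    "Going to shoot hoops after work",
    "This commute is going to kill me",
    "Great photo shoot downtown today",
    "Lovely weather this morning",
]

flagged = [post for post in SAMPLE_POSTS if flag_post(post)]
print(f"{len(flagged)} of {len(SAMPLE_POSTS)} harmless posts flagged")
```

Every flagged post here is innocent, and each one would still land in front of a human reviewer. A smarter filter can trim some of these matches, but it cannot tell a joke from a threat by vocabulary alone.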

Second, and aside from these practical concerns, we have a First Amendment right to be free from government monitoring, even when engaged in public activity. Just because an anti-war group meets in a church that is open to the public doesn’t mean the FBI should be able to spy on them. The same principle applies in the digital ether. The government should need a good reason, specific to a person, before it can go and monitor that person’s activity. Why? Because if we fear that one peaceful protest is being monitored, we fear they all will be. And, people who would otherwise engage in lawful protest won’t. It puts a big wet blanket on political discourse.

Third (and my colleague Mike German gets credit for this insight), when somebody gets snagged by these dragnets, it’s very difficult to clear the “cloud of suspicion.” Consider Richard Jewell, the late security guard who was initially praised as a hero in the 1996 Atlanta Olympics bombing and then became the prime suspect based, in part, on statements he made to the press. FBI agents, working under the profile of a “lone bomber” who planted the device only in order to heroically find it, reviewed Jewell’s television appearances and believed they matched. Although the investigation smacks of confirmation bias (agents seeing what they wanted to see), Jewell had great trouble escaping the cloud of suspicion. With an algorithm tracking everyone’s public statements on social media, take that problem and multiply it many-fold.

Finally, there is the very obvious problem that authorities are unlikely to uncover legitimately probative evidence of an impending shooting through automated OSINT. Put another way, it’s exceedingly rare, and I’m not aware of a case, where a mass murderer clearly announced his or her intention beforehand on YouTube, Facebook or Twitter. Rather, automated OSINT will likely start zeroing in, as indicative of dangerous intent, on indications of mental instability, extreme political views or just weird thoughts. These all qualify as constitutionally protected speech, and, indeed, political speech is often said to receive the highest level of First Amendment protection. (I would say that to the extent that an individual does take to a Facebook wall to issue a credible threat, that should of course be reported.)

All of this is to say that automated OSINT, in addition to being constitutionally problematic, just won’t work. It will divert limited law enforcement resources; focus investigative activity on quacks more than dangerous individuals; and increase the risk that police will miss the true threat, made in private to a trusted confidante, which does deserve swift action to protect the public. It’s a bad idea.