A video touting software created by Raytheon to mine data from social networks has been attracting an increasing amount of attention in the past few days, since it was reported on by Ryan Gallagher at the Guardian.

As best as I can tell from the video and Gallagher’s reporting, Raytheon’s “Riot” software gathers up only publicly available information from companies like Facebook, Twitter, and Foursquare. In that respect, it appears to be a conceptually unremarkable, fairly unimaginative piece of work. At the same time, by aspiring to carry out “large-scale analytics” on Americans’ social networking data (and to do so, apparently, on behalf of national security and law enforcement agencies), the project raises a number of red flags.

In the video, we see a demonstration of how social networking data, such as Foursquare check-ins, is used to predict the schedule of a sample subject, “Nick.” The host of the video concludes:

> Six a.m. appears to be the most frequently visited time at the gym. So if you ever did want to try to get ahold of Nick, or maybe get ahold of his laptop, you might want to visit the gym at 6:00 a.m. on Monday.

(The reference to the laptop is certainly jarring. Remember, this is an application apparently targeted at law enforcement and national security agencies, not at ordinary individuals. Given this, it sounds to me like the video is suggesting that Riot could be used as a way to schedule a black-bag job to plant spyware on someone’s laptop.)

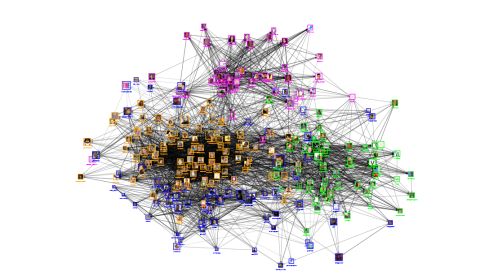

At the end of the video, there’s also a brief visual showing how Riot can use such data to carry out a link analysis of a subject. In link analysis, people’s communications and other connections to each other are mapped out and analyzed. The technique first came to the attention of many people in and out of government via an influential 2002 analysis by data mining expert Jeff Jonas showing how the 9/11 hijackers might easily have been linked together had the government focused on the two who were already wanted by the authorities. As Jonas noted in the face of attempts to make too much of this:

> Both Nawaf Alhamzi and Khalid Al-Midhar were already known to the US government to be very bad men. They should have never been let into the US, yet they were living in the US and were hiding in plain sight, using their real names…. The whole point of my 9/11 analysis was that the government did not need mounds of data, did not need new technology, and in fact did not need any new laws to unravel this event!

Nevertheless, link analysis appears to have been wholeheartedly embraced by the national security establishment, and to be justifying unconstitutionally large amounts of data collection on innocent people.

We don’t know that Raytheon’s software will ever play any such role; it just appears to aspire to do so. As with any tool, everything depends on how it’s used. But the fact is, we’re living in an age where disparate pieces of information about us are being aggressively mined and aggregated to discover new things about us. When we post something online, it’s all too natural to feel as though our audience is just our friends, even when we know intellectually that it’s really the whole world. Various institutions are gleefully exploiting that gap between our felt and actual audiences (a gap that is all too often worsened by online companies that don’t make it clear enough to their users who the full audience for their information is). Individuals need to be aware of this and take steps to compensate, such as double-checking their privacy settings and being aware of the full ramifications of data that they post.

At the same time, the government has no business rooting around people’s social network postings, even those that are voluntarily publicly posted, unless it has specific, individualized suspicion that a person is involved in wrongdoing. Among the many problems with government “large-scale analytics” of social network information is the prospect that government agencies will blunderingly use these techniques to tag, target and watchlist people coughed up by programs such as Riot, or to target them for further invasions of privacy based on incorrect inferences. The chilling effects of such activities, while perhaps gradual, would be tremendous.

Finally, let me just make the same point we’ve made with regard to privacy-invading technologies such as drones and cellphone and GPS tracking: these kinds of tools should be developed transparently. We don’t really know what Riot can do. And while we at the ACLU don’t think the government should be rummaging around individuals’ social network data without good reason, even a person who might disagree with us on that question could agree that it’s a question that should not be decided in secret. The balance between the intrusive potential of new technologies and government power is one that should be decided openly and democratically.