Algorithms Are Making Decisions About Health Care, Which May Only Worsen Medical Racism

Artificial intelligence (AI) and algorithmic decision-making systems, which analyze massive amounts of data and make predictions about the future, are increasingly affecting Americans’ daily lives. People are compelled to include particular keywords in their resumes to get past AI-driven hiring software. Algorithms are deciding who will get housing or financial loan opportunities. And biased exam-monitoring software is forcing students of color and students with disabilities to grapple with increased anxiety that they may be locked out of their exams or flagged for cheating. But there’s another frontier of AI and algorithms that should worry us greatly: the use of these systems in medical care and treatment.

The use of AI and algorithmic decision-making systems in medicine is increasing even though current regulation may be insufficient to detect harmful racial biases in these tools. Details about the tools’ development are largely unknown to clinicians and the public, a lack of transparency that threatens to automate and worsen racism in the health care system. Last week, the Food and Drug Administration (FDA) issued guidance significantly broadening the scope of the tools it plans to regulate. This broader guidance underscores that more must be done to combat bias and promote equity amid the growing number and increasing use of AI and algorithmic tools.

Bias in Medical and Public Health Tools

In 2019, a study found that a clinical algorithm many hospitals were using to decide which patients need care was showing racial bias: Black patients had to be deemed much sicker than white patients to be recommended for the same care. This happened because the algorithm had been trained on past data on health care spending, which reflects a history in which Black patients had less to spend on their health care compared to white patients, due to longstanding wealth and income disparities. While this algorithm’s bias was eventually detected and corrected, the incident raises the question of how many more clinical and medical tools may be similarly discriminatory.
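The mechanism described above, in which past spending stands in for medical need, can be illustrated with a small sketch. Everything in it is hypothetical: the group labels, the per-condition spending figures, and the `select_for_care` helper are invented for illustration and are not taken from the study or from the actual algorithm. Both groups have identical levels of illness, but group B is assumed to spend less per condition.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    group: str
    illness: int     # hypothetical number of chronic conditions (true need)
    spending: float  # hypothetical past-year health care spending, in dollars


def make_patients(spend_per_condition):
    """Build patients with identical illness levels (0-9) in every group."""
    patients = []
    for group, rate in spend_per_condition.items():
        for illness in range(10):
            patients.append(Patient(group, illness, illness * rate))
    return patients


def select_for_care(patients, slots):
    """Rank by past spending (the proxy) and fill the available slots."""
    ranked = sorted(patients, key=lambda p: p.spending, reverse=True)
    return ranked[:slots]


# Assumption: group B spends less per condition because of income disparities.
patients = make_patients({"A": 1000.0, "B": 600.0})
selected = select_for_care(patients, slots=6)

# Least-sick patient admitted from each group, and how many were admitted.
min_illness = {
    g: min(p.illness for p in selected if p.group == g) for g in ("A", "B")
}
admitted = {g: sum(1 for p in selected if p.group == g) for g in ("A", "B")}

print(min_illness)  # {'A': 5, 'B': 9}
print(admitted)     # {'A': 5, 'B': 1}
```

In this toy setup, a group A patient with five conditions is selected while a group B patient needs nine, even though the ranking never sees group membership. The spending proxy alone carries the disparity into the result.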

Another algorithm, created to determine how many hours of aid Arkansas residents with disabilities would receive each week, was criticized after it made sharp cuts to in-home care. Some residents attributed extreme disruptions to their lives and even hospitalization to the sudden cuts. A resulting lawsuit found that several errors in the algorithm, specifically errors in how it characterized the medical needs of people with certain disabilities, were directly to blame for the inappropriate cuts. Despite this outcry, the group that developed the flawed algorithm still creates tools used in health care settings in nearly half of U.S. states as well as internationally.

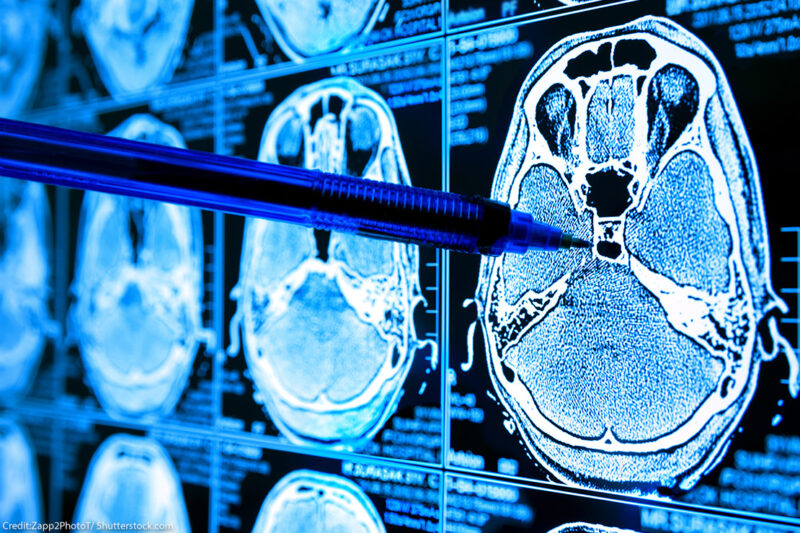

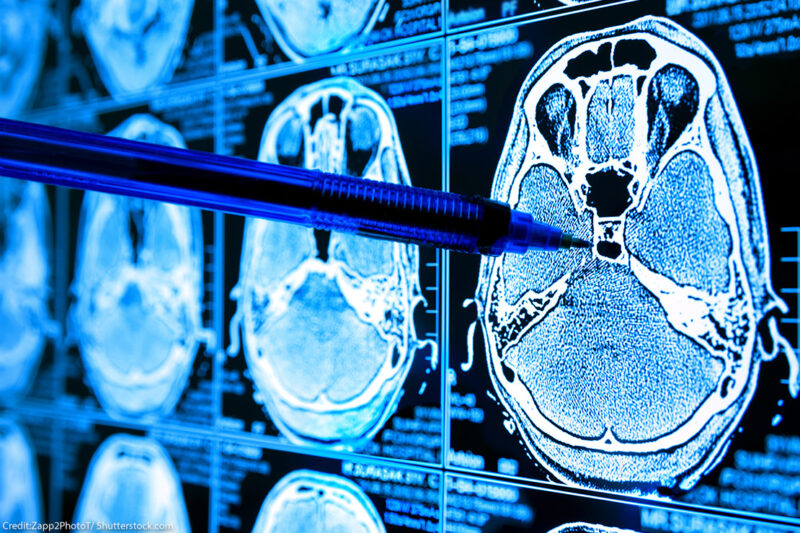

One study found that an AI tool trained on medical images, such as X-rays and CT scans, had unexpectedly learned to discern patients’ self-reported race. It learned to do this even though it was trained only with the goal of helping clinicians diagnose patient images. This technology’s ability to tell patients’ race, even when their doctor cannot, could be abused in the future, or could unintentionally direct worse care to communities of color without detection or intervention.

Tools Used in Health Care Can Escape Regulation

Some algorithms used in the clinical space are severely under-regulated in the U.S. The U.S. Department of Health and Human Services (HHS) and its subagency, the Food and Drug Administration (FDA), are tasked with regulating medical devices, which range from a tongue depressor to a pacemaker and now include medical AI systems. While some of these medical devices (including AI) and tools that aid physicians in treatment and diagnosis are regulated, other algorithmic decision-making tools used in clinical, administrative, and public health settings, such as those that predict risk of mortality, likelihood of readmission, and in-home care needs, are not required to be reviewed or regulated by the FDA or any regulatory body.

This lack of oversight can lead to biased algorithms being used widely by hospitals and state public health systems, contributing to increased discrimination against Black and Brown patients, people with disabilities, and other marginalized communities. In some cases, this failure to regulate can lead to wasted money and lives lost. One such AI tool, developed to detect sepsis early, is used by more than 170 hospitals and health systems. But a study revealed the tool failed to predict this life-threatening illness in 67 percent of patients who developed it, and generated false sepsis alerts for thousands of patients who did not. Acknowledging that this failure was the result of under-regulation, the FDA’s new guidelines point to these tools as examples of products it will now regulate as medical devices.

The FDA’s approach to regulating drugs, which involves publicly shared data that is scrutinized by review panels for adverse effects and events, contrasts with its approach to regulating medical AI and algorithmic tools. Regulating medical AI presents a novel issue and will require considerations that differ from those applicable to the hardware devices the FDA is used to regulating. Several of these hardware devices have themselves been found to reflect racial or ethnic bias in how well they function across subgroups. News of these biases only underscores how vital it is to properly regulate these tools and ensure they don’t perpetuate bias against vulnerable racial and ethnic groups.

A Lack of Transparency/Biased Data

While the FDA suggests that device manufacturers test their devices for racial and ethnic biases before marketing to the general public, this step is not required. Perhaps more important than assessments after a device is developed is transparency during its development. A study found that many AI tools approved or cleared by the FDA do not include information about the diversity of the data on which the AI was trained, and that the number of these tools being cleared is growing. Another study found that AI tools “consistently and selectively under-diagnosed under-served patient populations,” with a higher under-diagnosis rate for marginalized communities who disproportionately don’t have access to medical care. This is unacceptable when these tools may make decisions that have life-or-death consequences.

The Path Forward

Equitable treatment by the health care system is a civil rights issue. The COVID-19 pandemic has laid bare the many ways in which existing societal inequities produce health care inequities — a complex reality that humans can attempt to comprehend, but that is difficult to accurately reflect in an algorithm. The promise of AI in medicine was that it could help remove bias from a deeply biased institution and improve health care outcomes; instead, it threatens to automate this bias.

Policy changes and collaboration among key stakeholders, including state and federal regulators, medical, public health, and clinical advocacy groups and organizations, are needed to address these gaps and inefficiencies. To start, as detailed in a new white paper:

- Public reporting of demographic information should be required.

- The FDA should require an impact assessment of any differences in device performance by racial or ethnic subgroup as part of the clearance or approval process.

- Device labels should reflect the results of this impact assessment.

- The FTC should collaborate with HHS and other federal bodies to establish best practices that device manufacturers not under FDA regulation should follow to lessen the risk of racial or ethnic bias in their tools.
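As a rough illustration of what an impact assessment by subgroup might measure, the sketch below computes a tool's sensitivity (the share of truly ill patients it flags) separately for each group and reports the gap. The record layout, the group labels, the sample data, and the `sensitivity_by_group` helper are all assumptions made for this example; they do not describe any FDA procedure or any real device.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return {group: share of patients with the condition that the tool flagged}.

    Each record is a (group, has_condition, flagged) tuple.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, has_condition, flagged in records:
        if not has_condition:
            continue  # sensitivity only considers patients who have the condition
        if flagged:
            true_pos[group] += 1
        else:
            false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


# Hypothetical evaluation data: (group, has_condition, flagged_by_tool)
records = [
    ("X", True, True), ("X", True, True), ("X", True, True), ("X", True, False),
    ("Y", True, True), ("Y", True, False), ("Y", True, False), ("Y", True, False),
    ("X", False, False), ("Y", False, True),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())

print(rates["X"], rates["Y"])  # 0.75 0.25
print(gap)                     # 0.5
```

A real assessment would also need adequate sample sizes, confidence intervals, and other metrics such as false alert rates. The point here is only that a per-group breakdown can expose a disparity that a single overall accuracy figure would hide.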

Rather than learning of racial and ethnic bias embedded in clinical and medical algorithms and devices from bombshell publications revealing what amounts to medical and clinical malpractice, HHS, the FDA, and other stakeholders must work to ensure that medical racism becomes a relic of the past rather than a certainty of the future.