You would think that in the year 2018 our major public agencies would not be relying on mystical tests of truth like Dark Ages witch hunters. But you would be wrong. Wired on Monday published a fascinating piece on the use of polygraphs, aka “lie detectors,” for job screening by police and other government agencies in the United States.

The piece reviews the science, presents new evidence of the devices’ inherent unreliability, and introduces us to talented and well-credentialed individuals denied jobs in public service due to “failing” polygraph tests. And it also shows that the technology enables racial bias in hiring.

Lie detection technology has been rejected by scientists for decades, most definitively in a comprehensive report by the National Academies of Science that, as Wired put it, judged polygraphs to be “pseudoscientific hokum.” Lie detectors are banned for use by private employers (with a few very narrow exceptions) and are not admissible as evidence in court. Nevertheless, the law allows use of the devices in government hiring, and, as Wired shows, they are used in hiring decisions not only by national security agencies such as the FBI and CIA, but also by numerous state and local police departments.

Wired used records of polygraph use obtained from police departments and several lawsuits to show that not only do “failure” rates for various supposed lies vary wildly from examiner to examiner, but also that Black people have been “failed” disproportionately often.

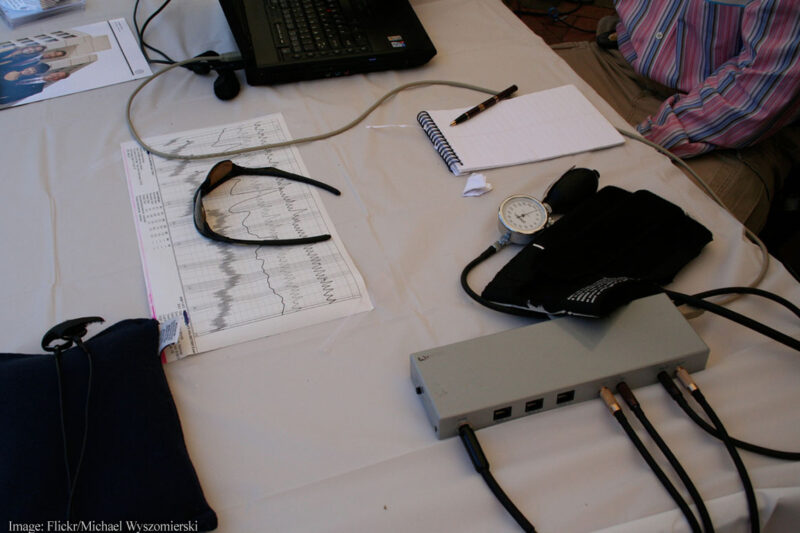

As the article puts it, “A polygraph only records raw data; it is up to an examiner to interpret the data and draw a conclusion about the subject’s honesty. One examiner might see a blood pressure peak as a sign of deception, another might dismiss it – and it is in those individual judgments that bias can sneak in.”

One neuroscience expert told the magazine, “The examiner’s decision is probably based primarily on the human interaction that the two people have.”

In this respect polygraphs are just like other pseudo-scientific technologies that we’ve seen in recent years: Because they are fundamentally bogus, they end up becoming no more than a vehicle for operators to substitute their own personal assessments of subjects in the absence of genuinely useful measurements. For some operators, that’s inevitably going to mean racial bias.

This was true, for example, of the TSA’s “SPOT” program, which attempts to train “Behavior Detection Officers” to identify terrorists by chatting with travelers near airport security lines. SPOT is based on searching for supposed “signs of terrorism” that are vague and commonplace, and – surprise, surprise – have devolved into crude racial profiling.

Worse than just creating new avenues for racial bias, programs like these also hide racial bias by couching it behind a veneer of scientific objectivity. In that respect they function in the same way that we fear that opaque AI algorithms will work – taking flawed and biased data, running it through an all-too-human process, and spitting out a result that, though biased, comes packaged as an “objective computer analysis.”

We’ve been warning for years that vague and unsubstantiated security techniques fuel racial profiling. In 2004, for example, we warned that broad and vague urgings by the authorities to report “suspicious” behavior “leave a much wider scope for racial profiling and paranoia directed at anyone who is different or stands out.”

With polygraphs, failure can have lasting negative repercussions in an applicant’s life. Wired reports, “Not only can a failing polygraph test cost you a job, it can also follow you around throughout your career. People who fail a polygraph are usually asked to report that fact if they reapply for law enforcement positions nationwide, and some departments can share polygraph results with other agencies in the same state.”

Today’s polygraphs are surprisingly similar to those developed many decades ago, but new schemes for supposedly detecting lies are constantly being put forward, including eye tracking, fMRI brain reading, and (of course) AI. None of them has been shown to be reliable in practical settings – for one thing, testing a device’s ability to detect highly motivated lying is very difficult to do.

As I’ve discussed, at the ACLU our opposition to lie detectors, which dates to the 1950s, has never been exclusively about their ineffectiveness or the specific technology of the polygraph. We have said since the 1970s that even if the polygraph were to pass an acceptable threshold of reliability or a more accurate lie-detection technology were to come along, we would still oppose it because we view techniques for peering inside the human mind as a violation of the Fourth and Fifth Amendments, as well as a fundamental affront to human dignity.

The use of lie detectors in hiring is an example of a larger disturbing trend: the apparent inability of American law enforcement to discriminate between solid science and highly discretionary techniques that, as National Geographic put it in a disturbing 2016 piece, rely “on methods and interpretations that involve more craft than science.” Among the forensic techniques that a scathing National Academies of Science report found to rest on uncertain scientific ground are hair analysis, bite-mark analysis, arson analysis (probably used to execute an innocent Texas man in 2004), and other widely relied-upon forensic techniques.

I doubt anyone knows just how much leeway such “craft” has left for racial bias and other injustices, but I shudder to think about it.