Family Surveillance by Algorithm: The Rapidly Spreading Tools Few Have Heard Of

Last month, police took American Idol finalist Syesha Mercado's days-old newborn, Ast, away because Syesha had not reported her daughter's birth to authorities while she was still fighting to regain custody of her son from the state. In February 2021, Syesha had taken her 13-month-old son, Amen'Ra, to a hospital because he had difficulty transitioning from breast milk to formula and was refusing to eat. What should have been an ordinary medical visit for a new mom instead prompted a state-contracted child abuse pediatrician with a known history of wrongfully reporting medical conditions as child abuse to call child welfare. Authorities took custody of Amen'Ra on the grounds that Syesha had neglected him. Syesha has since been reunited with Ast after substantial media attention and public outrage, but she continues to fight for Amen'Ra's return.

Meanwhile, it took over a year for Erin Yellow Robe, a member of the Crow Creek Sioux Tribe, to be reunited with her children. Based on an unsubstantiated rumor that Erin was misusing prescription pills, authorities took custody of her children and placed them with white foster parents, despite the federal Indian Child Welfare Act's requirements and the willingness of relatives and tribal members to care for the children.

For white families, these scenarios typically do not lead to child welfare involvement. For Black and Indigenous families, they often lead to years (potentially a lifetime) of ensnarement in the child welfare system or, as some are now more appropriately calling it, the family regulation system.

Child Welfare as Disparate Policing

Our country's latest reckoning with structural racism has involved critical reflection on the role of the criminal justice system, education policy, and housing practices in perpetuating racial inequity. The family regulation system needs to be added to this list, along with the algorithms working behind the scenes. That's why the РЯАФУХПЊНБНсЙћ has conducted a nationwide survey to learn more about these tools.

Women and children who are Black, Indigenous, or experiencing poverty are disproportionately placed under child welfare's scrutiny. Once there, Black and Indigenous families fare worse than their white counterparts at nearly every critical step. These disparities are partly the legacy of past social practices and government policies that sought to tear apart Black and Indigenous families. But the disparities are also the result of the continued policing of Black and Indigenous women in recent years through child welfare practices, laws, the failed war on drugs, and other criminal justice policies that punish women who fail to conform to particular conceptions of motherhood.

Turning to Predictive Analytics for Solutions

Many child welfare agencies have turned to risk assessment tools for reasons ranging from wanting to predict which children are at higher risk of maltreatment to improving agency operations. Allegheny County, Pennsylvania, has been using the Allegheny Family Screening Tool (AFST) since 2016. The AFST generates a risk score for complaints received through the county's child maltreatment hotline by looking at whether certain characteristics of the agency's past cases are also present in the complaint allegations. Among these characteristics are family members' demographics and prior involvement with the county's child welfare, jail, juvenile probation, and behavioral health systems. Intake staff then use this risk score as an aid in deciding whether to follow up on a complaint with a home study or a formal investigation, or to dismiss it outright.
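To make the mechanics concrete, here is a minimal sketch of how a screening score of this general kind could be computed. It is not the AFST's actual model: the feature names, weights, logistic form, 1-to-20 scale, and screening threshold below are all hypothetical assumptions chosen for illustration. What it shows is that each of these numbers is a design choice, and that a family's prior contact with public systems feeds directly into the score.

```python
import math

# Hypothetical weights for illustration only; NOT the AFST's real model.
# Each feature is a count drawn from past public-agency records.
WEIGHTS = {
    "prior_child_welfare_referrals": 0.40,
    "prior_jail_bookings": 0.30,
    "prior_juvenile_probation": 0.25,
    "prior_behavioral_health_contacts": 0.20,
}
INTERCEPT = -2.0          # hypothetical baseline log-odds
SCREEN_IN_THRESHOLD = 15  # hypothetical cutoff on a 1-20 scale


def predicted_probability(record: dict) -> float:
    """Logistic model: turn a weighted sum of record counts into a probability."""
    log_odds = INTERCEPT + sum(w * record.get(name, 0) for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-log_odds))


def risk_score(record: dict) -> int:
    """Map the probability onto a 1-20 scale using equal-width bins (an assumption)."""
    p = predicted_probability(record)
    return min(20, int(p * 20) + 1)


def screening_recommendation(record: dict) -> str:
    """Hypothetical rule: scores at or above the cutoff are flagged for investigation."""
    return "investigate" if risk_score(record) >= SCREEN_IN_THRESHOLD else "screen out"


# Two hypothetical complaints alleging the same thing. The second family simply
# has more recorded contact with public systems, so it receives a higher score.
family_a = {"prior_behavioral_health_contacts": 1}
family_b = {"prior_child_welfare_referrals": 4, "prior_jail_bookings": 3,
            "prior_behavioral_health_contacts": 5}

print(risk_score(family_a), screening_recommendation(family_a))  # 3 screen out
print(risk_score(family_b), screening_recommendation(family_b))  # 17 investigate
```

Note that nothing in the score describes the allegation itself; the gap between the two families comes entirely from how often each has already appeared in agency records.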

Like their criminal justice counterparts, however, child welfare risk assessment tools do not predict the future. For instance, a criminal risk assessment tool measures the odds that a person will be arrested in the future, not the odds that they will actually commit a crime. Just as being arrested doesn't necessarily mean you did something illegal, a child's removal from the home, often the target of a prediction model, doesn't necessarily mean the child was in fact maltreated.
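A small worked example shows why the choice of prediction target matters. The rates below are hypothetical and not drawn from any real jurisdiction: two groups have the same true rate of maltreatment, but children in one group who were not maltreated are more often removed anyway, for instance because that group is more heavily scrutinized. A model trained to predict removal would then learn a gap that does not exist in actual maltreatment.

```python
# Hypothetical rates for illustration only.
TRUE_MALTREATMENT_RATE = 0.05  # identical in both groups by construction

# Chance that a maltreated child is removed, and chance that a child who was
# NOT maltreated is removed anyway (e.g., after an unfounded investigation).
REMOVAL_GIVEN_MALTREATMENT = {"group_a": 0.6, "group_b": 0.6}
REMOVAL_GIVEN_NO_MALTREATMENT = {"group_a": 0.01, "group_b": 0.04}


def removal_rate(group: str) -> float:
    """Overall rate of the proxy label (removal) that a model would be trained on."""
    m = TRUE_MALTREATMENT_RATE
    return (m * REMOVAL_GIVEN_MALTREATMENT[group]
            + (1 - m) * REMOVAL_GIVEN_NO_MALTREATMENT[group])


for g in ("group_a", "group_b"):
    print(g, f"true maltreatment: {TRUE_MALTREATMENT_RATE:.4f}",
          f"removal (proxy label): {removal_rate(g):.4f}")
```

Under these assumptions, group_b's removal rate is about 1.7 times group_a's even though the true maltreatment rates are equal, so a model that predicts removal well would still rank group_b families as riskier.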

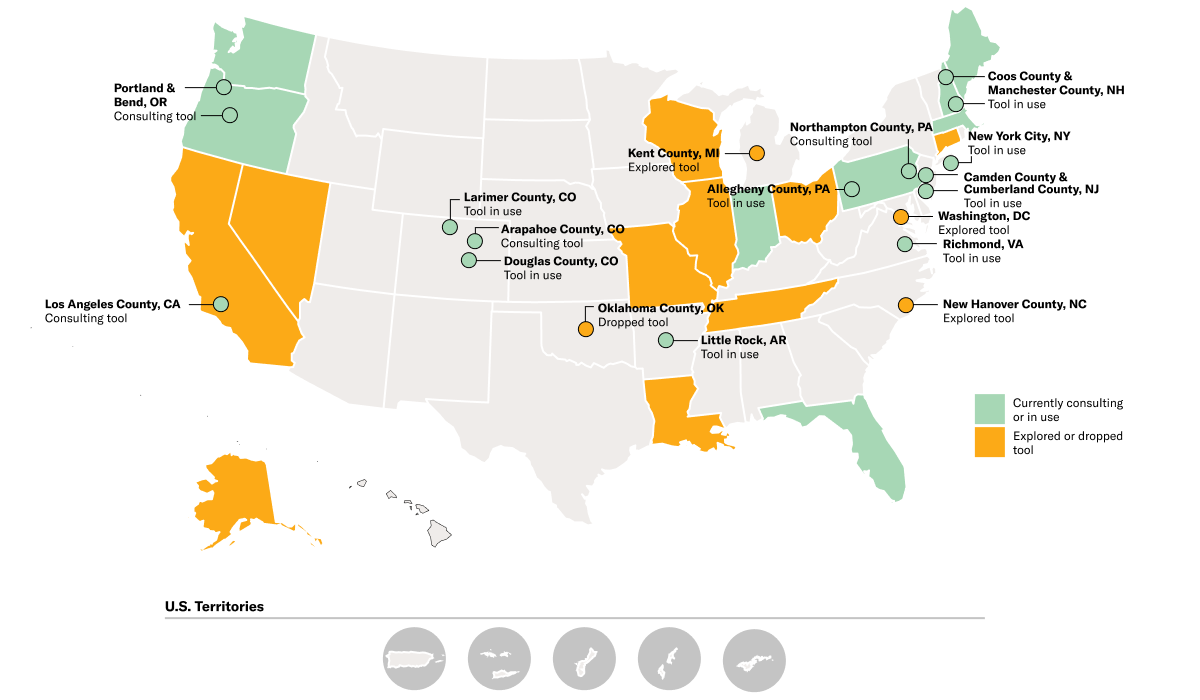

We examined how many jurisdictions across the 50 states, D.C., and U.S. territories are using one category of predictive analytics tools: models that systematically use data collected by jurisdictions' public agencies to attempt to predict the likelihood that a child in a given situation or location will be maltreated. Here's what we found:

- Local or state child welfare agencies in at least 26 states plus D.C. have considered using such predictive tools. Of these, jurisdictions in at least 11 states are currently using them.

- Several large jurisdictions have been using predictive analytics for several years now.

- Some tools currently in use, such as the AFST, are used when deciding whether to refer a complaint for further agency action, while others are used to flag open cases for closer review because the tool deems them to be higher-risk scenarios.

The Flaws of Predictive Analytics

Despite the growing popularity of these tools, few families or advocates have heard of them, much less provided meaningful input into their development and use. Yet countless policy choices and value judgments are made in the course of creating and using a tool, any or all of which can affect whether it promotes or reduces racial disproportionality in agency action.

Moreover, like the tools we have seen in the criminal legal system, any tool built from a jurisdiction's historical data runs the risk of perpetuating existing bias. Historically over-regulated and over-separated communities may get caught in a feedback loop that quickly magnifies the biases in these systems. Who decides what "high risk" means? When a caseworker sees a "high" risk score for a Black person, do they respond the same way they would for a white person?
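The feedback-loop concern can be illustrated with a toy simulation. Every parameter in it is a hypothetical assumption: two communities with identical true rates of maltreatment, a fixed number of investigations per round, and an allocation rule that sends most investigations to whichever community has more recorded cases so far. Because cases are only recorded where investigators look, a modest initial imbalance in scrutiny is locked in and widened, even though nothing about the underlying families differs.

```python
# Toy model of a data feedback loop; all parameters are hypothetical.
TRUE_RATE = 0.10             # identical in both communities by construction
INVESTIGATIONS_PER_ROUND = 200
SHARE_TO_HIGHER = 0.70       # rule: 70% of investigations go to the "higher-risk" community


def simulate(rounds: int, initial_share_a: float = 0.60) -> list[float]:
    """Return community A's share of all recorded cases after each round."""
    recorded = {"A": 0.0, "B": 0.0}
    share_a = initial_share_a
    history = []
    for _ in range(rounds):
        # Cases are recorded only where investigations actually happen.
        recorded["A"] += INVESTIGATIONS_PER_ROUND * share_a * TRUE_RATE
        recorded["B"] += INVESTIGATIONS_PER_ROUND * (1 - share_a) * TRUE_RATE
        history.append(recorded["A"] / (recorded["A"] + recorded["B"]))
        # Next round: whichever community has more recorded cases is treated as higher-risk.
        share_a = SHARE_TO_HIGHER if recorded["A"] >= recorded["B"] else 1 - SHARE_TO_HIGHER
    return history


for i, share in enumerate(simulate(5), start=1):
    print(f"round {i}: community A holds {share:.1%} of recorded cases")
```

In this sketch, community A's share of recorded cases climbs from 60% toward 70% round after round. A gentler rule that allocates investigations in proportion to recorded cases would instead freeze the initial 60/40 split in place, which is still a disparity with no basis in the families' actual circumstances.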

Ultimately, we must ask whether these tools are the best way to spend hundreds of thousands, if not millions, of dollars when such funds are urgently needed to help families avoid the crises that lead to abuse and neglect allegations.

What the РЯАФУХПЊНБНсЙћ is Doing

It's critical that we interrogate these tools before they become entrenched, as they have in the criminal legal system. Information about the data used to create a predictive algorithm, the policy choices embedded in the tool, and the tool's impact both system-wide and in individual cases should be disclosed to the public before a tool is adopted and throughout its use. In addition to such transparency, jurisdictions need to provide opportunities to question and contest a tool's implementation or its application in a specific case if our policymakers and elected officials are to be held accountable for the rules and penalties enforced through such tools.

In this vein, the РЯАФУХПЊНБНсЙћ has requested data from Allegheny County and other jurisdictions to independently evaluate the design and impact of their predictive analytics tools and any measures they may be taking to address fairness, due process, and civil liberty concerns.

It's time that all of us ask our local policymakers to end the unnecessary and harmful policing of families through the family regulation system.

Read the full white paper:

Family Surveillance by Algorithm

By Anjana Samant, Aaron Horowitz, Kath Xu, and Sophie Beiers

Source: РЯАФУХПЊНБНсЙћ